Service Management

Management of Robots.txt

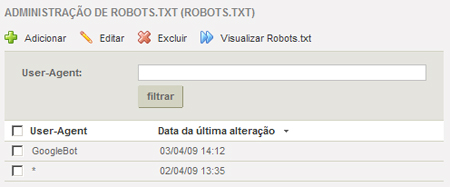

The Robots.txt service has an administrative environment for adding the user-agent configurations that will compose the robots.txt.

Note that this environment does not create the robots.txt itself. It only records which user-agents can access the robots.txt of a specific service instance for a specific solution.

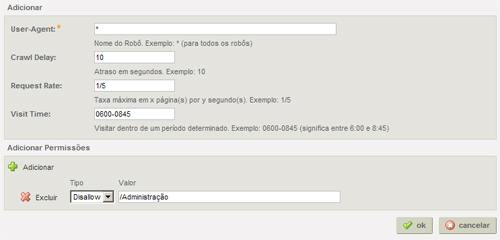

To add a configuration, click Add and fill in the following information:

- UserAgent: name of the user-agent that will compose the robots.txt. Entering "*" in this field indicates that any user-agent can access this robots.txt;

- Crawl Delay: the interval, in seconds, that the search engine's user-agent should wait between page requests once the robots.txt has been read;

- Request Rate: the number of pages that the search engine's user-agent may navigate and index per given number of seconds;

- Visit Time: the time interval during which requests can be made;

- Add Permissions: you can add as many access permissions for different page paths as needed, assigning either allow or disallow to each path. Typically, a list of "disallow" paths is defined. To permit specific pages under a disallowed path, configure those pages as "allow".
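As an illustration of how the fields above could map onto a robots.txt group, here is a minimal Python sketch. It is not the service's own implementation: the `UserAgentConfig` class and `render_group` function are hypothetical names, and the `pages/seconds` format for Request-rate and the `HHMM-HHMM` format for Visit-time are assumptions borrowed from common robots.txt extensions. Allow rules are listed before Disallow rules here because some parsers apply the first matching rule.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class UserAgentConfig:
    """Hypothetical mirror of the form fields; not the service's data model."""
    user_agent: str = "*"
    crawl_delay: Optional[int] = None                # seconds between requests
    request_rate: Optional[Tuple[int, int]] = None   # (pages, seconds), assumed format
    visit_time: Optional[Tuple[str, str]] = None     # ("HHMM", "HHMM"), assumed format
    # Each permission is ("allow" | "disallow", path)
    permissions: List[Tuple[str, str]] = field(default_factory=list)


def render_group(cfg: UserAgentConfig) -> str:
    """Render one user-agent group as robots.txt text."""
    lines = [f"User-agent: {cfg.user_agent}"]
    if cfg.crawl_delay is not None:
        lines.append(f"Crawl-delay: {cfg.crawl_delay}")
    if cfg.request_rate is not None:
        pages, seconds = cfg.request_rate
        lines.append(f"Request-rate: {pages}/{seconds}")
    if cfg.visit_time is not None:
        start, end = cfg.visit_time
        lines.append(f"Visit-time: {start}-{end}")
    for rule, path in cfg.permissions:
        if rule not in ("allow", "disallow"):
            raise ValueError(f"unknown permission type: {rule!r}")
        lines.append(f"{rule.capitalize()}: {path}")
    return "\n".join(lines) + "\n"


example = UserAgentConfig(
    user_agent="*",
    crawl_delay=10,
    request_rate=(1, 5),
    visit_time=("0600", "0845"),
    permissions=[
        ("allow", "/private/public-page"),  # exception inside a disallowed path
        ("disallow", "/private/"),
    ],
)
print(render_group(example))
```

The example values (a 10-second delay, the `/private/` path) are placeholders chosen only to show one entry per form field.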

You must also provide the URLs of the sections and pages that cannot be reached through links and menus. These URLs are exposed through sitemaps, which must be registered so that the robots.txt can reference them.

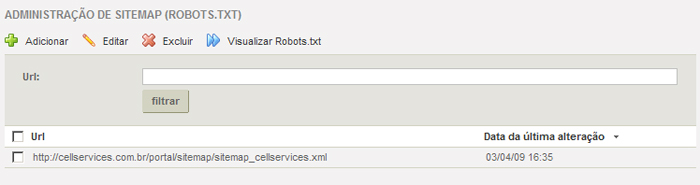

Management of Sitemap

This management area lets you provide the sitemaps to be listed in the robots.txt, so that the user-agent can navigate pages that are not reachable through normal navigation in a solution.

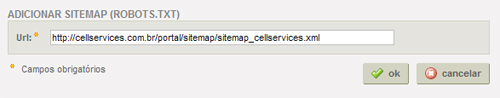

To provide the sitemaps that the robots.txt will use (one at a time), click Add and fill in the following information:

- URL: the URL formed when the sitemaps were registered in the desired sitemap service instance. This URL has the form: URL of the solution/sitemap/generated_xml_file_of_sitemap.
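The URL pattern described above can be sketched in Python as follows. The function names `build_sitemap_url` and `sitemap_directive`, the `https://www.example.com` solution URL, and the `sitemap.xml` file name are hypothetical and used only for illustration; the actual file name is whatever the sitemap service generated.

```python
def build_sitemap_url(solution_url: str, sitemap_file: str) -> str:
    """Join the solution URL, the fixed 'sitemap' segment, and the XML file name."""
    return f"{solution_url.rstrip('/')}/sitemap/{sitemap_file.lstrip('/')}"


def sitemap_directive(sitemap_url: str) -> str:
    """Format the line that references a sitemap inside a robots.txt."""
    return f"Sitemap: {sitemap_url}"


# Placeholder values, not taken from a real solution
url = build_sitemap_url("https://www.example.com/", "sitemap.xml")
print(sitemap_directive(url))
```

Trimming the slashes before joining avoids a doubled `/` when the solution URL is entered with a trailing slash.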

Through the robots.txt sitemap management, you can also view the composed robots.txt, including the sitemap information (if any).
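One way to sanity-check a composed robots.txt outside the administrative environment is to parse it with Python's standard `urllib.robotparser` module. The sketch below is only an illustration: the robots.txt content, the `ExampleBot` user-agent, and the `www.example.com` URLs are invented placeholders, not output copied from the service. `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt content, assumed for illustration only
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
Request-rate: 1/5
Allow: /private/public-page
Disallow: /private/

Sitemap: https://www.example.com/sitemap/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# This parser applies the first matching rule, so the Allow line
# placed before the Disallow line acts as an exception.
allowed_exception = parser.can_fetch("ExampleBot", "https://www.example.com/private/public-page")
blocked_page = parser.can_fetch("ExampleBot", "https://www.example.com/private/report")

delay = parser.crawl_delay("ExampleBot")   # seconds, or None if not set
rate = parser.request_rate("ExampleBot")   # named tuple (requests, seconds), or None
sitemaps = parser.site_maps()              # list of sitemap URLs, or None

print(allowed_exception, blocked_page, delay, rate, sitemaps)
```

Different crawlers resolve overlapping allow and disallow rules differently, so this check reflects only how Python's parser reads the file.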
